When people talk about the artificial intelligence revolution, the focus is usually on software. We hear about the latest Large Language Models (LLMs), Python code, or the ethics of generative algorithms. Even the best software still needs physical hardware to actually work.

The real driving force behind the AI revolution is not just code. It is the silicon chips that make everything possible.

In 2026, the symbiotic relationship between software and hardware has become undeniable. Industry analysts forecast that state-of-the-art AI accelerators will exceed 100 tera operations per second per watt (TOPS/W), powering models with over 1 trillion parameters and enabling real-time inference in both data centers and edge devices. While software provides the instructions, AI hardware provides the brute-force processing power required to run models with billions of parameters in milliseconds.

We have moved past the era where traditional CPUs could handle the workload. We are now firmly in the age of specialized accelerators, where the architecture of a chip defines the capabilities of the artificial intelligence it powers.

This guide examines the hardware that powers the industry, from large data centers to tiny NPUs in smartphones, and shows how fields like healthcare are driving new advances in chip technology.

What is AI Hardware Development?

AI hardware development is the practice of designing computer chips optimized for AI workloads. Unlike general-purpose CPUs, which handle a variety of mostly sequential tasks, AI computing requires massive parallel processing power.

The move to specialized, parallel hardware was not random. For years, general-purpose processors improved thanks to Dennard scaling and Moore’s Law, which made each new generation faster.

But as these improvements slowed and physical limits emerged, industries had to find new ways to accelerate computing. The need to process large volumes of AI data pushed companies to use chips designed for parallel processing rather than traditional CPUs.

Think of it this way: a standard CPU is like a single, brilliant mathematician solving complex problems one after another. AI hardware, especially GPUs and other accelerators, is more like an army of students solving thousands of simple math problems simultaneously.

For example, a modern CPU cluster in a data center might reach about 1 teraflops (one trillion floating-point operations per second). A GPU cluster of the same size can deliver over 100 teraflops, a hundredfold difference. This speedup makes parallel hardware essential for training deep learning models, which rely on matrix multiplication over large datasets.
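
To put those throughput figures in perspective, here is a minimal sketch that estimates how long one large matrix multiplication would take at each rate. The function names and the 8,192 × 8,192 matrix size are illustrative assumptions; the 1 and 100 teraflops figures are the ones quoted above. The estimate is idealized and ignores memory bandwidth and other real-world overheads.

```python
def matmul_flops(m: int, k: int, n: int) -> int:
    """Floating-point operations for an (m x k) @ (k x n) product.

    Each of the m * n outputs needs k multiplies and k adds.
    """
    return 2 * m * k * n


def seconds_at(flops: int, teraflops: float) -> float:
    """Idealized run time at a sustained throughput given in teraflops."""
    return flops / (teraflops * 1e12)


# Hypothetical workload: one 8192 x 8192 by 8192 x 8192 multiplication.
work = matmul_flops(8192, 8192, 8192)

cpu_seconds = seconds_at(work, teraflops=1.0)    # CPU cluster figure from the text
gpu_seconds = seconds_at(work, teraflops=100.0)  # GPU cluster figure from the text

print(f"Operations: {work:.3e}")
print(f"CPU cluster: {cpu_seconds:.3f} s")
print(f"GPU cluster: {gpu_seconds:.4f} s")
print(f"Speedup: {cpu_seconds / gpu_seconds:.0f}x")
```

Training a deep learning model repeats multiplications like this millions of times, which is why a gap of this size decides whether a training run is practical at all.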

Here are some examples of how this plays out in the real world:

- A GPU in a data center trains a large language model.

- An ASIC chip in a self-driving car processes sensor data in real time.

- A low-power NPU in a smartphone enables features such as live translation.

The Need for Speed and Efficiency

Two metrics largely determine how good AI hardware is: latency (how quickly it returns a result) and energy efficiency (how much work it performs per watt). For instance, a virtual assistant may need its on-device processing to finish in under 10 milliseconds for the response to feel instant, and analysts expect the best AI accelerators to exceed 100 tera operations per second per watt (TOPS/W). Setting clear targets for these numbers makes it easier to compare hardware options.

- Reduced Latency: In applications like self-driving cars, real-time processing is essential. A car cannot wait even two seconds to tell a person from a mailbox. Specialized hardware makes these decisions in milliseconds.

- Energy Efficiency: As AI models grow, they use more power. Today’s AI accelerators are built to do as many calculations as possible while using less electricity and producing less heat.
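
The two metrics above are simple to compute once you have measurements. The sketch below is a hedged illustration: the function names and the accelerator figures (400 trillion operations per second at 5 watts, 7.5 ms measured latency) are hypothetical values chosen for the example, and the 10 ms budget is the target mentioned in this section.

```python
def tops_per_watt(ops_per_second: float, watts: float) -> float:
    """Energy efficiency in tera operations per second per watt."""
    return ops_per_second / 1e12 / watts


def meets_latency_budget(latency_ms: float, budget_ms: float) -> bool:
    """True when a measured latency fits inside the target budget."""
    return latency_ms <= budget_ms


# Hypothetical accelerator: 400 trillion operations per second at 5 watts.
efficiency = tops_per_watt(ops_per_second=400e12, watts=5.0)
print(f"Efficiency: {efficiency:.1f} TOPS/W")

# Hypothetical measured latency checked against the 10 ms target.
within_budget = meets_latency_budget(latency_ms=7.5, budget_ms=10.0)
print("Within budget:", within_budget)
```

Comparing candidate chips on the same two numbers, measured on your own workload, is usually more informative than comparing peak figures from a spec sheet.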

Key Types of AI Hardware (The “Big Three”)

Not all chips are the same. The right hardware depends on whether you are training a huge model or just running a simple chatbot on your phone.

GPUs (graphics processing units) were first developed for video game graphics, but their parallel architecture made them ideal for AI. NVIDIA leads this area, and its hardware is used for most major generative AI training.

But the GPU market is changing. AMD and Intel have made significant improvements, gaining ground with products such as AMD’s Instinct accelerators and Intel’s Data Center GPUs. While NVIDIA is still ahead, more types of GPUs are now being used for AI.

Google concluded that GPUs might not be efficient enough for its needs, so it created the Tensor Processing Unit (TPU), a chip specialized for machine learning. TPUs are very fast at matrix math, making them well suited to training and running models in Google Cloud.

However, using TPUs usually means your AI work is tied to Google Cloud. This can make it harder to use other cloud platforms. Thinking about these trade-offs helps companies make better long-term hardware decisions.

If you have a smartphone from the last few years, it probably has an NPU (neural processing unit). These specialized chips are built into devices to handle AI tasks like face unlock, live translation, or photo editing, all without sending your data to the cloud.

AI Hardware Use Cases

Each industry has its own hardware needs. Knowing these needs is important for picking the right setup.

1. Training Large Models

Training is the most demanding part of AI. It needs large groups of powerful GPUs, like NVIDIA’s Blackwell series, all connected with fast networks.

For example, training a large language model like GPT-3 has been estimated to use over 1.2 million kilowatt-hours (kWh) of energy. That is about what 120 U.S. homes use in a year. Data centers with these GPU clusters can use as much power as a small city.
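
A quick back-of-the-envelope check makes the household comparison concrete. The sketch below assumes an average U.S. home uses roughly 10,800 kWh of electricity per year; that figure is an approximation introduced here, not one from this article. The training energy is the lower bound of 1.2 million kWh quoted above, so the real equivalent is somewhat higher.

```python
TRAINING_KWH = 1_200_000          # training energy figure quoted above
HOME_KWH_PER_YEAR = 10_800        # assumed average U.S. household usage


def homes_powered(energy_kwh: float, months: int) -> float:
    """Number of average homes the energy would supply for `months` months."""
    per_home = HOME_KWH_PER_YEAR * months / 12
    return energy_kwh / per_home


print(f"Homes for one year:  {homes_powered(TRAINING_KWH, 12):.0f}")
print(f"Homes for one month: {homes_powered(TRAINING_KWH, 1):.0f}")
```

Under these assumptions, a single training run is on the order of a hundred homes' annual electricity use, or more than a thousand homes' use for one month.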

2. Edge Computing

Edge AI handles data right on the device, at the edge of the network. Drones, factory robots, and self-driving cars need tough, low-power, and very fast hardware to work in real time.

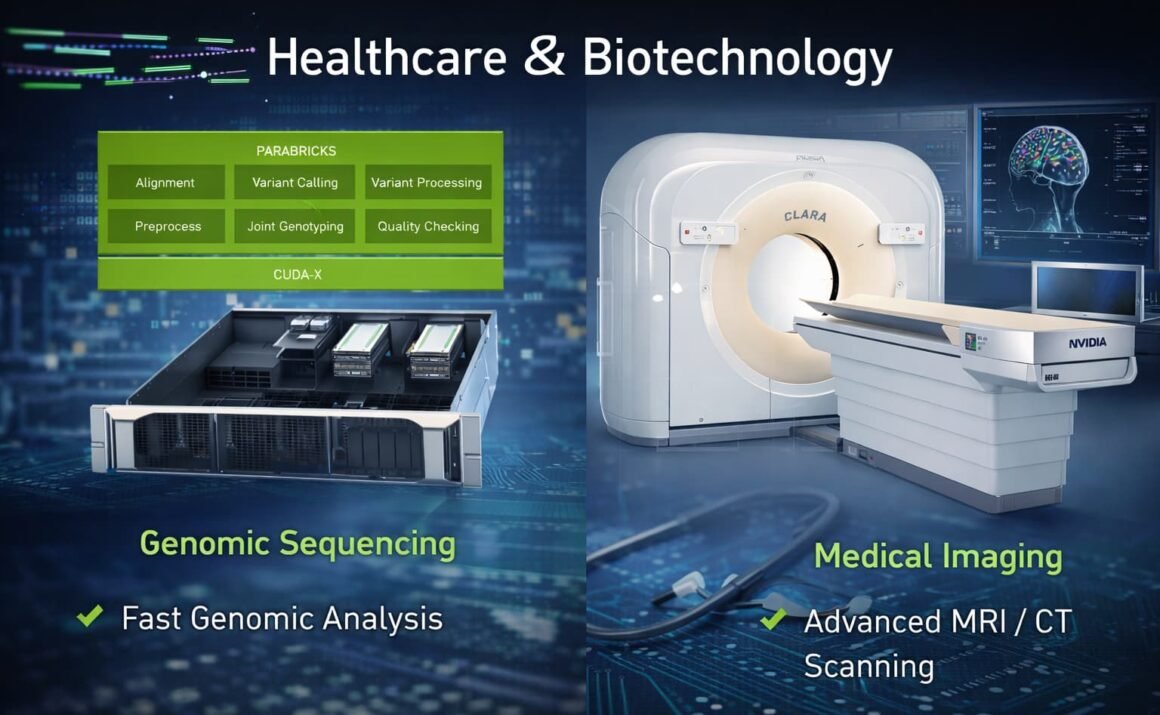

3. Healthcare & Biotechnology

AI hardware arguably has its greatest impact in healthcare, where it must be not only fast but also precise and capable of handling large amounts of data.

- Genomic Sequencing: Mapping a human genome creates terabytes of data. Standard CPUs can take days to analyze it, but special platforms like NVIDIA Parabricks use GPUs to finish the job in less than an hour. This speed is vital for quickly diagnosing rare genetic disorders in newborns.

- Medical Imaging: AI is changing radiology. Tools like NVIDIA Clara enable AI to run directly inside MRI and CT scanners. These models can enhance low-quality images, reduce radiation exposure, and flag problems in real time to help doctors. Here, hardware does more than process data: it helps save lives.

Buyer’s Logic: The Decision Matrix

Picking the right hardware can feel overwhelming. Whether you are a developer, a startup founder, or a hobbyist, this decision matrix can help you choose where to invest.

| If your task is… | You should prioritize… | Recommended Hardware Class |
| --- | --- | --- |
| Learning / Small Projects | Cost-efficiency and accessibility | Consumer GPUs (e.g., NVIDIA RTX series) |
| Mobile App Integration | Low power consumption and on-device privacy | Integrated NPUs (Apple Neural Engine, Qualcomm Hexagon) |
| Medical Imaging / Genomics | High throughput and precision | Workstation GPUs (NVIDIA RTX 6000 Ada) or specialized Medical Edge devices |
| Large Scale Model Training | Maximum VRAM and interconnect speed | Enterprise Data Center Chips (NVIDIA H100, AMD MI300X, Google TPU) |
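
If you want to embed this matrix in a script or internal tool, it maps naturally onto a lookup table. The following is a minimal sketch: the `Recommendation` class, the `recommend` function, and the short task keys are hypothetical names chosen for illustration, while the priorities and hardware classes are copied from the table above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recommendation:
    priority: str
    hardware_class: str


# Rows copied from the decision matrix; the dictionary keys are illustrative.
DECISION_MATRIX = {
    "learning": Recommendation(
        "Cost-efficiency and accessibility",
        "Consumer GPUs (e.g., NVIDIA RTX series)",
    ),
    "mobile": Recommendation(
        "Low power consumption and on-device privacy",
        "Integrated NPUs (Apple Neural Engine, Qualcomm Hexagon)",
    ),
    "medical": Recommendation(
        "High throughput and precision",
        "Workstation GPUs (NVIDIA RTX 6000 Ada) or specialized Medical Edge devices",
    ),
    "training": Recommendation(
        "Maximum VRAM and interconnect speed",
        "Enterprise Data Center Chips (NVIDIA H100, AMD MI300X, Google TPU)",
    ),
}


def recommend(task: str) -> Recommendation:
    """Look up a task key, raising a helpful error for unknown tasks."""
    try:
        return DECISION_MATRIX[task.strip().lower()]
    except KeyError:
        options = ", ".join(sorted(DECISION_MATRIX))
        raise ValueError(f"Unknown task {task!r}; choose one of: {options}") from None


choice = recommend("Mobile")
print(f"Prioritize: {choice.priority}")
print(f"Hardware:   {choice.hardware_class}")
```

Keeping the matrix as data rather than a chain of `if` statements makes it easy to add a row when a new hardware class becomes relevant.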

Best AI Hardware Development Tools

To use the power of these chips, you need a strong software stack. This is the layer that lets high-level code, such as Python, interact with the hardware. Here are some top AI hardware development tools that are used today:

- CUDA (NVIDIA): The industry standard for parallel computing. This programming model lets developers use NVIDIA GPUs for general-purpose processing. Its maturity and vast ecosystem are fundamental to NVIDIA’s market lead.

- OpenVINO (Intel): A comprehensive toolkit designed to optimize deep learning models. It ensures efficient execution across Intel’s diverse hardware portfolio, ranging from standard CPUs to specialized AI accelerators.

- ROCm (AMD): An open-source software stack that serves as an alternative to CUDA. It is designed for developers who use AMD’s Instinct accelerators for high-performance computing.

- FPGAs: Field-Programmable Gate Arrays are widely used for hardware development. They allow engineers to test and refine chip designs before committing to expensive mass production.

Industry Leaders: Top AI Hardware Companies

A handful of major companies lead the AI chip market:

| Company | Key Contribution |
| --- | --- |
| NVIDIA | Market leader with the H100 and Blackwell B200. |
| AMD | A rising challenger with the MI300 series. |
| Google | Leader in custom Cloud TPUs. |
| Intel | Democratizing AI with Gaudi accelerators and Core Ultra processors. |
| HaaS Providers | Hardware as a Service companies like AWS and Azure allow developers to “rent” power they can’t afford to buy. |

Hardware as a Service (HaaS)

Let’s face the reality of 2026: a single enterprise-grade GPU can cost upwards of $30,000. For most startups, buying hardware is not feasible. This has solidified the Hardware-as-a-Service model. Providers like AWS (Amazon Web Services), Microsoft Azure, and Google Cloud allow developers to “rent” these powerful chips by the hour. This lowers the barrier to entry, allowing a student in a dorm room to train a model on the same hardware used by Fortune 500 companies.
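
A simple break-even calculation shows why renting appeals to small teams. This is a rough sketch: the $30,000 purchase price comes from the paragraph above, while the $4 per GPU-hour rental rate is a hypothetical figure chosen for illustration (real cloud prices vary by provider, region, and chip). The model also ignores power, cooling, staffing, and resale value.

```python
GPU_PURCHASE_PRICE = 30_000.0   # purchase price quoted above, in USD
RENTAL_RATE_PER_HOUR = 4.0      # hypothetical cloud rate, in USD per GPU-hour


def rental_cost(hours: float, rate: float = RENTAL_RATE_PER_HOUR) -> float:
    """Total cost of renting one GPU for the given number of hours."""
    return hours * rate


def break_even_hours(price: float = GPU_PURCHASE_PRICE,
                     rate: float = RENTAL_RATE_PER_HOUR) -> float:
    """Hours of rental after which buying would have been cheaper."""
    return price / rate


hours = break_even_hours()
print(f"Break-even: {hours:.0f} GPU-hours (~{hours / 24:.0f} days of continuous use)")
print(f"A 200-hour training run costs about ${rental_cost(200):,.0f} to rent")
```

Under these assumptions, a team would need to keep a GPU busy around the clock for most of a year before buying beats renting, which is why occasional users tend to rent.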

Several companies specialize in AI hardware and keep pushing the boundaries of computing power. NVIDIA leads in designing GPUs optimized for AI workloads, enabling faster model training and inference. AMD is another key player, offering high-performance CPUs and GPUs tailored for AI applications.

Startups like Graphcore and Cerebras Systems are innovating with custom AI chips that aim to beat general-purpose GPUs on speed and efficiency. These companies are driving advancements that make AI more accessible and scalable across industries.

Critical Challenges in the Industry

Even with all the progress, there are still challenges. For example, in 2021, a global chip shortage delayed manufacturing in many industries, from cars to electronics.

A major problem occurred when top GPU vendors experienced months-long delays in fulfilling cloud orders. This slowed the rollout of new AI features at big tech companies. These supply issues made companies delay launches, rethink their supply chains, and question just-in-time inventory methods.

Events like this show why it is important to plan for risks in AI hardware. No matter how advanced the technology is, real-world problems can slow down even the best plans.

Chips are running hotter than ever. Standard air cooling is no longer sufficient for top-tier AI hardware, forcing data centers to transition toward advanced liquid and immersion cooling solutions to prevent overheating.

Relying on a limited number of chip factories presents a significant global risk. Nations are now in a high-stakes race to establish local manufacturing hubs to satisfy the skyrocketing demand for specialized AI silicon.

“Green AI” is now a critical priority. Training massive models consumes immense energy and leaves a significant carbon footprint, prompting hardware makers to focus on maximizing performance-per-watt to mitigate environmental impact.

The Future of AI Computing

Looking back at the progress made in 2025, it is clear that artificial intelligence hardware is finally matching the potential of software. We are moving away from brute-force methods toward smarter, more efficient designs. Imagine if new neuromorphic chips could cut your energy use by 99 percent. Which of your projects would change the most? Now is the time to think about what you can try to get ready for this shift, and who on your team will lead the way.

At Tech News Health, we believe the next big step is Neuromorphic Computing. These chips are built to work like the human brain, using spiking neural networks to process data with much less energy. At the same time, Quantum AI promises to solve problems that regular computers cannot handle.
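
To make the idea of a spiking neuron concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, one of the simplest models used in spiking neural networks. It is a software toy, not a description of how any particular neuromorphic chip works; the function name, the threshold, the leak factor, and the input signal are all illustrative assumptions.

```python
def simulate_lif(inputs, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    At each step the membrane potential decays by `leak`, adds the input,
    and emits a spike (1) and resets if it reaches `threshold`.
    """
    potential = reset
    spikes = []
    for current in inputs:
        potential = potential * leak + current
        if potential >= threshold:
            spikes.append(1)
            potential = reset
        else:
            spikes.append(0)
    return spikes


# Hypothetical input: a weak constant signal followed by silence.
signal = [0.3] * 10 + [0.0] * 5
spikes = simulate_lif(signal)
print("Spikes:", spikes)
print("Active steps:", sum(spikes), "of", len(spikes))
```

The neuron produces output on only a few time steps. Event-driven hardware can, in principle, skip work whenever there is no spike, which is where the hoped-for energy savings come from.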

For businesses and developers, the message is simple: the AI hardware you choose now will determine how smart your applications become in the future. Whether you are working in healthcare or building the next great chatbot, the real power lies in the silicon. Stay tuned to our latest tech and health insights to stay ahead of this shift.
